From R&D to RAG: Automating Error Analysis with On-Prem AI

Executive Summary

To address internal challenges around AI data privacy and limited context windows, RUNNINGHILL championed a developer-led R&D initiative. The project produced a working AI agent, “Project Raggedy Rag,” that runs 100% locally, ingests entire codebases for full context, and automates error analysis and ticket creation. This zero-cost proof of concept (POC), built with open-source tools in just 2-3 months of part-time effort, demonstrates our deep expertise in RAG, Graph Databases, and secure, on-prem AI implementation.

The Internal Challenge

Like many forward-thinking software companies, our development team was eager to leverage AI assistants to accelerate debugging. However, we faced two critical blockers.

First, standard AI tools have limited context windows, making it impossible for them to analyze an entire, complex codebase to find a root cause. Second, using third-party AI APIs presented an unacceptable security risk, as it would require sending our proprietary source code to external servers. Our team was stuck manually analyzing error logs and creating tickets—a slow process that we knew AI could solve if implemented correctly.

The Solution: Innovation in Action

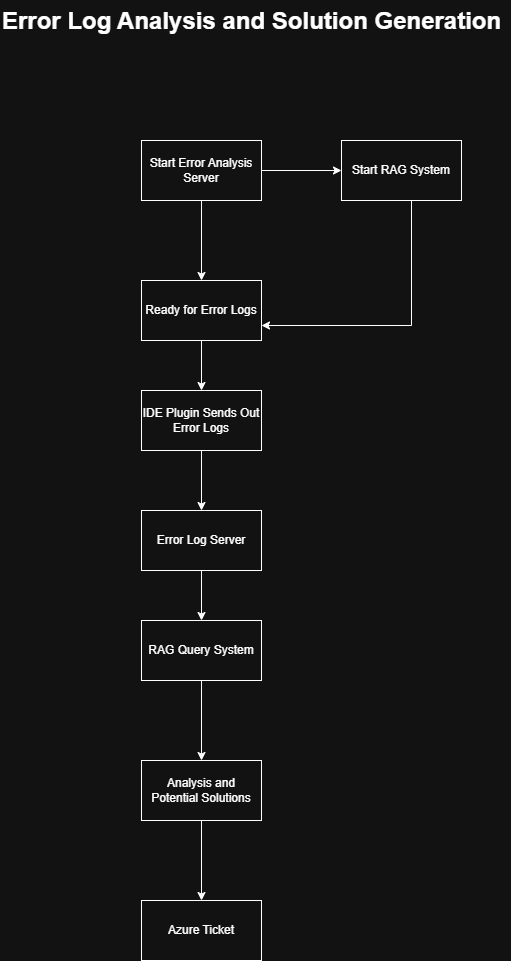

We built “Project Raggedy Rag,” an end-to-end AI agent that runs 100% locally on a developer’s machine. The system securely ingests an entire project codebase, giving it complete context. It then integrates with the developer’s IDE, watches for error logs in real-time, and automatically generates a detailed bug ticket in Azure DevOps with a step-by-step, AI-generated solution.
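The ticket-creation step maps onto the Azure DevOps REST API ("Work Items - Create"). The sketch below builds that request using only the Python standard library. It is an illustration, not the project's code, and several details are assumptions:

- the organization, project, personal access token (PAT) and API version;
- a process template (Agile, Scrum or CMMI) that defines the Bug work item type;
- the choice of the `Microsoft.VSTS.TCM.ReproSteps` field to hold the log and the fix;
- the helper names, which are hypothetical.

```python
import base64
import html
import json
import urllib.parse
import urllib.request

API_VERSION = "7.0"  # assumed REST API version; use one your Azure DevOps instance supports


def build_bug_patch(title: str, error_log: str, ai_solution: str) -> list[dict]:
    """JSON Patch (RFC 6902) body describing the new Bug's fields."""
    # Repro Steps is an HTML field, so escape the raw log and the model output.
    repro_steps = (
        "<h3>Error log</h3>"
        f"<pre>{html.escape(error_log)}</pre>"
        "<h3>AI-generated solution</h3>"
        f"<pre>{html.escape(ai_solution)}</pre>"
    )
    return [
        {"op": "add", "path": "/fields/System.Title", "value": title},
        {"op": "add", "path": "/fields/Microsoft.VSTS.TCM.ReproSteps", "value": repro_steps},
    ]


def build_bug_request(org: str, project: str, pat: str, patch: list[dict]) -> urllib.request.Request:
    """POST request for the Work Items - Create endpoint, authenticated with a PAT."""
    url = (
        f"https://dev.azure.com/{urllib.parse.quote(org)}/{urllib.parse.quote(project)}"
        f"/_apis/wit/workitems/$Bug?api-version={API_VERSION}"
    )
    # A PAT is sent as the password of HTTP Basic auth with an empty username.
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(patch).encode("utf-8"),
        headers={
            "Content-Type": "application/json-patch+json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )
```

Sending the request is a single `urllib.request.urlopen(req)` call. The JSON response describes the new work item, including its `id`.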

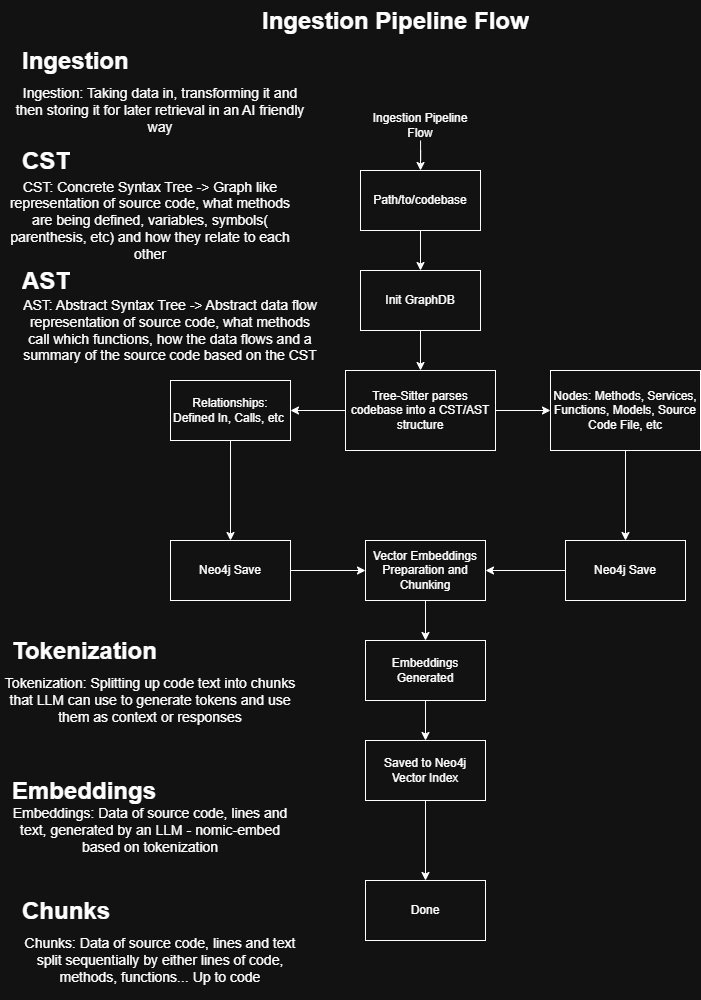

The agent’s intelligence comes from a sophisticated Retrieval-Augmented Generation (RAG) architecture. Here’s how it works:

- Ingestion: The pipeline uses Tree-sitter to parse every source file in the codebase into a concrete syntax tree (CST), which captures the code’s full structure, including its methods and the calls between them.

- Storage: This structure is stored in a local Neo4j database in two forms: as a graph (nodes and relationships that map method calls and other code dependencies) and as vector embeddings (numeric representations of the code’s semantic meaning, used for similarity search).

- Analysis: A JetBrains IDE plugin sends error logs over a WebSocket connection to a local Python server in real time.

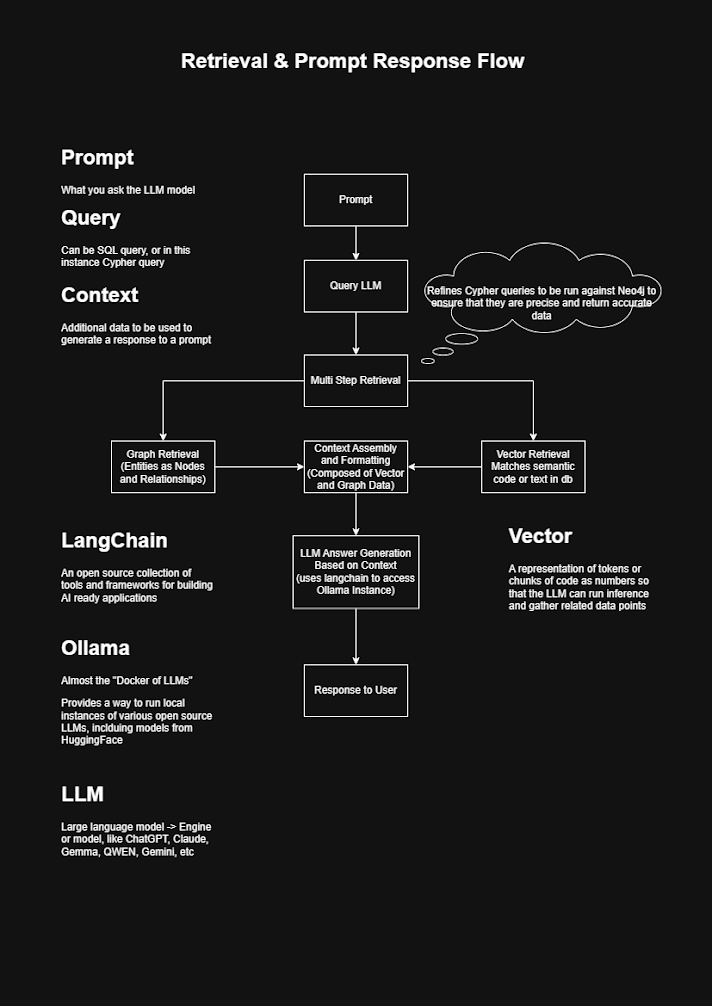

- RAG: The system queries its Neo4j database to retrieve the exact code snippets and relationship data relevant to the error.

- Generation: This highly relevant context is passed to a local LLM (Gemma 3 4B running on Ollama) to generate a precise solution.

- Action: The system automatically creates a new ticket in Azure DevOps, complete with the error log and the AI-generated fix.
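The Ingestion, Storage, RAG and Generation steps can be made more concrete with a minimal, standard-library-only sketch. It is not the project's implementation, and it differs from the real pipeline in several ways:

- It swaps Tree-sitter for Python's built-in `ast` module. Tree-sitter plays the same role across many languages.
- It assumes Python-style tracebacks.
- It uses hypothetical names throughout: the `Function` label, the `CALLS` relationship, the `source` property and every helper function.
- It only generates the Cypher statements. With the official Neo4j Python driver, each pair could be passed to `session.run(query, params)`.
- It omits vector embeddings for brevity.
- It builds the Ollama request (`gemma3:4b`, default port 11434) but does not send it.

```python
import ast
import json
import re
import urllib.request
from collections import deque

# Hypothetical graph schema: (:Function {name, source})-[:CALLS]->(:Function).
MERGE_FUNCTION = "MERGE (f:Function {name: $name}) SET f.source = $source"
MERGE_CALL = (
    "MATCH (a:Function {name: $caller}), (b:Function {name: $callee}) "
    "MERGE (a)-[:CALLS]->(b)"
)
# Matches frames of a Python-style traceback and captures the function name.
FRAME = re.compile(r'File "[^"]+", line \d+, in (\w+)')


def extract_call_graph(source: str) -> tuple[dict[str, str], set[tuple[str, str]]]:
    """Ingestion: map function names to their source text and collect call edges."""
    tree = ast.parse(source)
    functions: dict[str, str] = {}
    calls: set[tuple[str, str]] = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions[node.name] = ast.get_source_segment(source, node) or ""
            for child in ast.walk(node):
                # Only plain calls like load_cart(...); method calls are ignored here.
                if isinstance(child, ast.Call) and isinstance(child.func, ast.Name):
                    calls.add((node.name, child.func.id))
    # Keep only edges between functions defined in this code (drops print, len, ...).
    return functions, {(a, b) for a, b in calls if b in functions}


def to_cypher(functions, calls):
    """Storage: (query, parameters) pairs, e.g. for the Neo4j driver's session.run()."""
    statements = [(MERGE_FUNCTION, {"name": n, "source": s}) for n, s in sorted(functions.items())]
    return statements + [(MERGE_CALL, {"caller": a, "callee": b}) for a, b in sorted(calls)]


def neighbourhood(calls, start, hops=1):
    """Retrieval: breadth-first expansion over call edges in both directions."""
    seen = set(start)
    frontier = deque((name, 0) for name in start)
    while frontier:
        name, depth = frontier.popleft()
        if depth == hops:
            continue
        adjacent = {b for a, b in calls if a == name} | {a for a, b in calls if b == name}
        for nxt in adjacent - seen:
            seen.add(nxt)
            frontier.append((nxt, depth + 1))
    return seen


def build_prompt(log, functions, names):
    """Augmentation: the error log plus only the retrieved code snippets."""
    snippets = "\n\n".join(functions[n] for n in sorted(names) if n in functions)
    return (
        "Explain the root cause of this error and give a step-by-step fix.\n\n"
        f"### Error log\n{log}\n### Relevant code\n{snippets}"
    )


def ollama_request(prompt, model="gemma3:4b"):
    """Generation: a non-streaming request to a local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        "http://localhost:11434/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


SAMPLE = '''
def load_cart(user_id):
    return None

def submit_order(user_id):
    cart = load_cart(user_id)
    print("submitting")
    return cart.items

def checkout(user_id):
    return submit_order(user_id)
'''

LOG = '''Traceback (most recent call last):
  File "shop.py", line 11, in checkout
  File "shop.py", line 8, in submit_order
AttributeError: 'NoneType' object has no attribute 'items'
'''

if __name__ == "__main__":
    functions, calls = extract_call_graph(SAMPLE)
    failing = FRAME.findall(LOG)[-1:]  # innermost frame: submit_order
    prompt = build_prompt(LOG, functions, neighbourhood(calls, failing))
    request = ollama_request(prompt)
    # Needs Ollama running with the model pulled (ollama pull gemma3:4b):
    # with urllib.request.urlopen(request) as response:
    #     print(json.load(response)["response"])
```

Expanding one hop from the innermost frame (`submit_order`) pulls in two neighbours:

- its callee `load_cart`, where the `None` originates;
- its caller `checkout`.

This is the kind of relationship data that the graph side of the store adds on top of vector similarity.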

The Build

This project was a testament to our culture of innovation and upskilling. It began as a grassroots R&D initiative by one of our developers, Thulane Ntozekhe, who dedicated 1-2 hours a day over 2-3 months to build the system from scratch.

The process was an agile sprint of rapid prototyping and deep technical problem-solving. It involved researching and benchmarking local LLMs (switching from Mistral to Gemma 3 4B for better performance), architecting a “multi-stage query optimization” system to improve the AI’s prompts, and debugging the ingestion pipeline to ensure 100% of the code was captured accurately. The result was a powerful, stable POC that we estimate could be reproduced in just 2-3 weeks of full-time work.
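Comparing local models, as described above, can be approached with a small timing harness against Ollama's REST API. This is a hedged sketch, not the team's benchmark. The prompt, the number of runs and the helper names are assumptions. It relies on two fields that Ollama returns from a non-streaming `/api/generate` call: `eval_count` (tokens generated) and `eval_duration` (nanoseconds). It assumes Ollama is listening on its default port, 11434.

```python
import json
import time
import urllib.request

# Ollama's default local endpoint (assumes a standard install).
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(result: dict) -> float:
    """Generation speed from Ollama's eval_count and eval_duration (nanoseconds)."""
    return result["eval_count"] * 1e9 / result["eval_duration"]


def run_once(model: str, prompt: str) -> dict:
    """Send one non-streaming request and record the wall-clock latency."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    start = time.perf_counter()
    with urllib.request.urlopen(request) as response:
        result = json.load(response)
    result["wall_seconds"] = time.perf_counter() - start
    return result


def benchmark(models: list[str], prompt: str, runs: int = 3) -> dict[str, dict[str, float]]:
    """Average speed and latency per model, excluding an initial warm-up call."""
    summary = {}
    for model in models:
        run_once(model, prompt)  # warm-up: loads the model into memory, not measured
        results = [run_once(model, prompt) for _ in range(runs)]
        summary[model] = {
            "tokens_per_second": sum(tokens_per_second(r) for r in results) / runs,
            "wall_seconds": sum(r["wall_seconds"] for r in results) / runs,
        }
    return summary
```

To run it, pull both models first (`ollama pull mistral`, `ollama pull gemma3:4b`). Then `benchmark(["mistral", "gemma3:4b"], prompt)` returns the mean tokens per second and mean wall-clock latency for each model. The untimed warm-up call keeps model loading time out of the averages. Answer quality still has to be judged separately.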

The Results

The project was a massive success, delivering tangible results and showcasing our team’s advanced capabilities.

“Thulane, thank you, that was… really nice. I especially like the slides and how you explained it… there’s quite a few terms you explained really well that people should be getting now or have a better understanding of at least.”

– Henk Bothma, Head of IT

Key Metrics:

- $0 in Operational Costs: The entire system was built on a free stack of open-source tools and an open-weight model (Ollama, Gemma 3 4B, LangChain Community, and Neo4j Community Edition).

- 100% Data Privacy: The on-prem architecture ensures our proprietary source code never leaves the developer’s machine.

- Demonstrated R&D Efficiency: A single developer created a feature-complete AI agent POC in just 2-3 months of part-time R&D effort.

- Deep Technical Upskilling: Our team gained and demonstrated invaluable expertise in a cutting-edge AI stack, including RAG, Graph Databases, local LLMs, and multi-stage retrieval.

Why This Matters to Our Clients

This project is more than just a powerful internal tool; it’s a direct reflection of how we solve problems for our clients. The challenges of data privacy, system integration, and leveraging complex data are universal. Our success here demonstrates:

- We Build Securely: We don’t just talk about data privacy; we build for it at an architectural level. We can implement powerful AI solutions for you that run entirely within your own secure environment.

- We Are AI Experts: We don’t just use off-the-shelf AI. We have the deep technical expertise to build custom, end-to-end RAG systems from the ground up, selecting the right models (LLMs), databases (GraphDBs, VectorDBs), and frameworks (LangChain) for your specific data.

- We Are Proactive Problem-Solvers: We foster a culture of innovation where our developers are empowered to build solutions, not just wait for them. The expertise we gained in building this AI agent is the same expertise we now apply to help our clients develop their own intelligent automation solutions.

About RUNNINGHILL

Established in 2013, Runninghill Software Development is among South Africa’s fastest-growing B-BBEE Level 2 companies in the Information Technology sector. With over 50 full-stack developers specialising in finance, banking, fintech, and more, we help companies design, build, and ship amazing products.

We are passionate software developers who deliver world-class solutions using the best tools in the industry. We focus on technical team augmentation, consulting, and agentic solution design, and we partner with the best design agencies so we can concentrate on what we do best: technical excellence.

Our services include:

- Technical Consultation: We partner with your business to identify opportunities, solve complex challenges, and design strategic technology roadmaps that drive growth and digital transformation.

- AI-enabled Technical Team Augmentation: We provide skilled developers and technical experts who integrate seamlessly with your existing team to accelerate project delivery and fill capability gaps.

- Agents as a Service (AaaS): We design, build, and deploy intelligent AI agents that work autonomously within your business processes, creating a digital workforce that handles complex tasks while you focus on strategy.

Frequently Asked Questions (FAQ)

1. What was the main challenge this AI agent solved? The project solved two primary challenges: data privacy and context limitation. Using third-party AI tools requires sending proprietary source code to external servers, creating a security risk. Furthermore, most AI models have limited context windows and cannot analyze an entire codebase at once. This agent solves both problems. It runs 100% locally on the developer’s machine, and it ingests the entire codebase into a local knowledge base, from which its RAG system retrieves only the code relevant to each error.

2. How is this agent different from a standard AI chatbot like ChatGPT? A standard chatbot only knows what’s in its general training data. This agent uses Retrieval-Augmented Generation (RAG), which means it first retrieves specific, relevant information from your private codebase before generating an answer. It also uses a Neo4j Graph Database to understand the complex relationships between different parts of the code (e.g., “method A calls method B”), giving it a much deeper and more accurate understanding than a standard chatbot.

3. What was the operational cost of this AI agent? The operational and licensing cost is $0. The entire system was built on a powerful, free stack: Ollama for running the LLM locally, Gemma 3 4B (an open-weight model) as the language model, the open-source LangChain Community package, and Neo4j Community Edition. This demonstrates our ability to build powerful solutions without expensive, recurring vendor costs.

4. Can RUNNINGHILL build a custom AI agent or RAG system for my business? Yes. This internal project is a “proof of work” that directly showcases our expertise in this area. We can design and build secure, custom AI solutions that connect to your specific company data—whether it’s codebases, document libraries, or databases. We will architect the right solution (local, cloud, or hybrid) to meet your exact security, performance, and business needs.

5. What is the difference between our “AI-enabled Team Augmentation” and “Agents as a Service (AaaS)”? AI-enabled Team Augmentation is about scaling your team. We provide our skilled developers and technical experts, who bring their AI knowledge with them, to integrate seamlessly into your existing projects and accelerate your delivery.

Agents as a Service (AaaS) is about scaling your operations. We design, build, and deploy autonomous AI agents that act as a “digital workforce” to handle complex tasks and entire business processes for you, freeing up your team to focus on strategy.

WRITTEN BY

Runninghill Software Development